Will GDPR Kill Risk-Based Authentication?

No, I’m not declaring another thing in identity management dead. Instead, I’d like you to join me in exploring something that has been bugging me quite a bit lately.

Risk-based authentication can cover a spectrum of capabilities, but most generically it is a passive authentication factor that tries to measure the risk of a particular interaction (a transaction, a request for access, etc.) and determine whether the authentication done so far is sufficient or needs to be supplemented by additional challenges. It typically takes into account various pieces of information that can be gathered silently from the context of the interaction (in other words, working behind the scenes without forcing the user to do anything explicitly, or even realize anything is happening). Examples include information about the device; about the environment (like IP address, time of day and geolocation); about the user's behavior (the way they hold the device, their typing speed); and about the transaction itself (checking balances or moving money, buying goods above a certain amount or of a certain type). At a high level, risk-based authentication returns a risk score that the enterprise needs to interpret, deciding whether the score is above a threshold that requires invoking an additional active authentication factor (like an SMS-based OTP or an authenticator code).
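To make the score-and-threshold idea concrete, here is a minimal sketch. The signals, the point weights, the `HIGH_VALUE` amount and the `STEP_UP_THRESHOLD` are all hypothetical, chosen only for illustration; commercial services use far richer signals and typically learned models rather than hand-set weights.

```python
from dataclasses import dataclass

# Hypothetical point weights (0-100 scale); a real service would tune or learn these.
NEW_DEVICE_POINTS = 35
NEW_COUNTRY_POINTS = 30
ODD_HOURS_POINTS = 10
HIGH_VALUE_POINTS = 25
HIGH_VALUE = 1000.0      # hypothetical amount that counts as a "high value" transaction
STEP_UP_THRESHOLD = 50   # hypothetical score at which an extra factor is required


@dataclass
class Context:
    """Signals gathered silently from the interaction (illustrative subset)."""
    known_device: bool
    usual_country: bool   # e.g. derived from IP geolocation
    usual_hours: bool     # time of day compared with the user's history
    amount: float = 0.0   # transaction value; 0 for non-monetary requests


def risk_score(ctx: Context) -> int:
    """Add points for each risky signal, capped at 100."""
    score = 0
    if not ctx.known_device:
        score += NEW_DEVICE_POINTS
    if not ctx.usual_country:
        score += NEW_COUNTRY_POINTS
    if not ctx.usual_hours:
        score += ODD_HOURS_POINTS
    if ctx.amount >= HIGH_VALUE:
        score += HIGH_VALUE_POINTS
    return min(score, 100)


def needs_step_up(ctx: Context, threshold: int = STEP_UP_THRESHOLD) -> bool:
    """True if the enterprise should invoke an active factor such as an OTP."""
    return risk_score(ctx) >= threshold


if __name__ == "__main__":
    routine = Context(known_device=True, usual_country=True, usual_hours=True, amount=20.0)
    suspicious = Context(known_device=False, usual_country=False, usual_hours=True)
    print(risk_score(routine), needs_step_up(routine))        # 0 False
    print(risk_score(suspicious), needs_step_up(suspicious))  # 65 True
```

Where to set the threshold is the crux: lower it and more legitimate users get challenged; raise it and more risky interactions pass silently.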

One of the reasons risk-based authentication has been so attractive over the last few years is that it is seen as a second authentication factor that doesn't hurt the user experience, because in most cases it requires nothing of the user beyond their first factor (entering their password, or supplying a biometric like TouchID). One of the reasons it has been so challenging for enterprises is that, by design, it is a heuristic approach to authentication (heuristic = not guaranteed to be optimal or perfect, but sufficient for the immediate goals). That means customers have to deal with false positives that either block legitimate users outright, or challenge them for additional factors more often than is considered acceptable.

One of the solutions to this challenge has been the cloud; more specifically, SaaS solutions that provide risk-based authentication as a service. These products can address the main requirement for improving the effectiveness of these algorithmic approaches: huge amounts of current and historical data gathered across all their customers. These services take advantage of the network effects of the cloud by building up vast databases that let them scale beyond the data they would get from any one customer, and that avoid the bootstrap problem (a new standalone deployment has no historical information to go by). That is why they can produce a reasonably well-calculated risk score for a new or newer user of customer organization A: they may already know something about that user from that person's prior interactions at customer organization B. But therein lies the dilemma.
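A toy model of that network effect, as a hedged sketch: a hypothetical `SharedSignalStore` that a multi-tenant service might keep, keyed by a device identifier shared across all its customers. The class, the identifier format and the outcome labels are assumptions for illustration, not any vendor's actual design.

```python
from collections import defaultdict
from typing import Dict, List, Optional, Set, Tuple


class SharedSignalStore:
    """Hypothetical pooled history kept by a multi-tenant risk service."""

    def __init__(self) -> None:
        # device_id -> list of (tenant, outcome) observations from every customer
        self._history: Dict[str, List[Tuple[str, str]]] = defaultdict(list)

    def record(self, tenant: str, device_id: str, outcome: str) -> None:
        """Log an observed outcome (e.g. "ok" or "fraud") for a device at a tenant."""
        self._history[device_id].append((tenant, outcome))

    def prior_fraud_rate(self, device_id: str) -> Optional[float]:
        """Fraction of pooled observations marked fraud, or None if never seen."""
        events = self._history.get(device_id)
        if not events:
            return None  # the bootstrap problem: nothing to go by
        frauds = sum(1 for _, outcome in events if outcome == "fraud")
        return frauds / len(events)

    def tenants_seen(self, device_id: str) -> Set[str]:
        """Which customer organizations have contributed data about this device."""
        return {tenant for tenant, _ in self._history.get(device_id, [])}


if __name__ == "__main__":
    store = SharedSignalStore()
    # The person has only ever interacted with customer organization B.
    store.record("org-B", "dev-123", "ok")
    store.record("org-B", "dev-123", "ok")
    store.record("org-B", "dev-123", "fraud")
    # Organization A sees this device for the first time, yet gets a prior.
    print(store.prior_fraud_rate("dev-123"))  # 0.3333333333333333
    print(store.prior_fraud_rate("dev-999"))  # None
```

The same pooling that solves the bootstrap problem for organization A is what lets the person's activity at organization B shape their experience at A.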

You Only Thought You Could Avoid Talking About GDPR

I resisted as long as I could. As a privacy wonk, I've found it hard not to pay attention to the impact of GDPR on our industry. If you were at the RSA Conference a few weeks ago, you couldn't walk 20 steps or go 10 minutes without hearing the acronym. In fact, there was a whole section of RSAC dedicated to the topic of GDPR (lots of good content is available on RSAC onDemand). And you know you've arrived when you have your own Dummies guide. If you want a quick primer, take a read of this.

The General Data Protection Regulation is a substantial overhaul of the data protection laws in the European Union, designed to ensure data privacy and give EU residents more control over their personal data in the brave new digital world of today. GDPR essentially changes how personal data can be used. Personal data, a complex category of information under GDPR, broadly means any piece of information that can be used to identify a person.

GDPR sets out the rights of individuals (referred to as data subjects) and the obligations placed on organizations covered by the regulation. These include giving people easier access to the data companies hold about them, a clear responsibility for organizations to obtain the consent of the people they collect and collate information about, and an obligation to inform them about how it is being used.

You See Where This Is Going

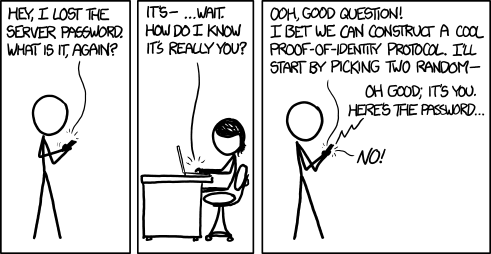

How do services that rely on network effects and signals across customers operate in the GDPR world? How does one reconcile GDPR controls with cross-entity impact (the interaction of the data subject at organization A is influenced by their past and ongoing interactions at organization B, both of which are customers of the same risk-based authentication service)? GDPR gives data subjects the right to require organizations to erase, correct or disclose the personal data they hold about them, and also to restrict or stop the processing of that data. But how do you operationalize this when the person's frame of reference is just organization A, and can their data even be untangled from the picture that the risk-based authentication service has built?
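Consider a hypothetical pooled history that maps a device identifier to (tenant, outcome) observations from every customer. An erasure request that arrives through organization A can, at most, remove A's own contributions. The sketch below (function names and data layout are assumptions) shows that the person remains recognizable through organization B's data, and it does nothing about scores or models already derived from the pooled data.

```python
from typing import Dict, List, Tuple

# Hypothetical pooled history: device_id -> [(tenant, outcome), ...]
History = Dict[str, List[Tuple[str, str]]]


def erase_for_tenant(history: History, tenant: str, device_id: str) -> int:
    """Drop one tenant's observations of a device; return how many were removed."""
    events = history.get(device_id, [])
    kept = [event for event in events if event[0] != tenant]
    removed = len(events) - len(kept)
    if kept:
        history[device_id] = kept
    else:
        history.pop(device_id, None)  # nothing left from any tenant
    return removed


def still_recognizable(history: History, device_id: str) -> bool:
    """True if any tenant's data about this device remains in the pool."""
    return bool(history.get(device_id))


if __name__ == "__main__":
    pool: History = {
        "dev-123": [("org-A", "ok"), ("org-B", "ok"), ("org-B", "fraud")],
    }
    # The data subject asks organization A to erase their data.
    print(erase_for_tenant(pool, "org-A", "dev-123"))  # 1
    # Organization B's observations remain; this handler never touches derived scores or models.
    print(still_recognizable(pool, "dev-123"))         # True
```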

Privacy Is Not Dead, But Anonymity Might Be

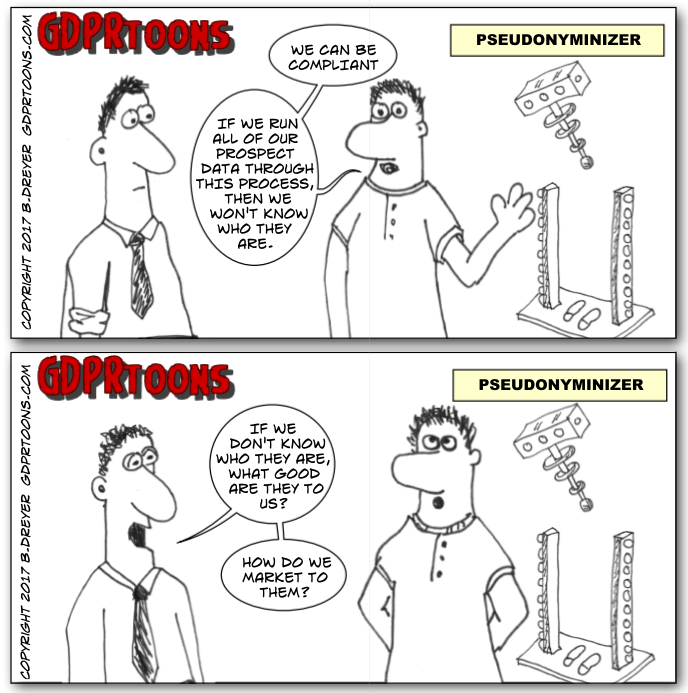

One answer that I've seen risk-based authentication services throw out there is that they are anonymizing and encrypting the data. Leaving aside the fact that anonymization has been shown time and time again not to work, the data they are typically referring to is what would be classified as personal data, like name and email address. However, GDPR explicitly goes further than current data protection laws to also cover pseudonymised personal data (where it's possible to identify a person based on the pseudonym). This covers data like device identifiers, IP addresses, location, behavior, etc., and covers the exact function that risk-based authentication claims to accomplish: identifying the returning user to a reasonable degree of assurance based on this data. So it could reasonably be argued that GDPR effectively covers ALL the data that risk-based authentication services collect and collate.
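A small illustration of why hashing an identifier is pseudonymisation rather than anonymization. The helper names, the normalization rule and the per-tenant keys are hypothetical; `hashlib` and `hmac` are Python's standard-library modules. An unkeyed hash maps the same email address to the same pseudonym at every customer, which is precisely what lets a shared service link a person across organizations (and anyone who can guess the input can recompute it). A keyed hash with a per-tenant secret breaks that direct cross-tenant link, but in doing so it also gives up the network effect the service is selling.

```python
import hashlib
import hmac


def _normalize(identifier: str) -> str:
    # Assumed normalization rule: trim whitespace and lowercase the whole string.
    return identifier.strip().lower()


def pseudonymise(identifier: str) -> str:
    """Unkeyed SHA-256: the same input yields the same pseudonym everywhere."""
    return hashlib.sha256(_normalize(identifier).encode("utf-8")).hexdigest()


def tenant_scoped_pseudonym(identifier: str, tenant_key: bytes) -> str:
    """HMAC-SHA-256 with a per-tenant secret: pseudonyms differ between tenants."""
    return hmac.new(tenant_key, _normalize(identifier).encode("utf-8"), hashlib.sha256).hexdigest()


if __name__ == "__main__":
    # The same person signs up at two customer organizations.
    at_org_a = pseudonymise("Alice@Example.com")
    at_org_b = pseudonymise(" alice@example.com ")
    print(at_org_a == at_org_b)  # True: linkable across tenants

    # Hypothetical per-tenant keys; in practice these would be secret and random.
    scoped_a = tenant_scoped_pseudonym("alice@example.com", b"key-for-org-A")
    scoped_b = tenant_scoped_pseudonym("alice@example.com", b"key-for-org-B")
    print(scoped_a == scoped_b)  # False: no direct cross-tenant link
```

Either way the output is still a pseudonym, not anonymous data: whoever holds the key, or enough of the surrounding context, can tie it back to the person.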

I don’t have an answer here, nor do I claim to completely understand the complexity of this topic. If someone has an answer or can point me to something I missed, please share. I will definitely be looking to understand this next week at the European Identity Conference in Munich, where I will be talking about human-friendly security, a topic that is peripherally related to this.

An Opinion (Or Plea)

This topic also points to a great responsibility that lies with us Identity Professionals (no Steve, I'm still not calling myself an Attribute Professional, and I think this post perhaps exemplifies why). We're ostensibly building systems and standards for good, to protect the people and businesses that rely on us. I've talked in the past about the power of the Cloud and SaaS to create better security for all. But I don't think it is irony, bad luck or blindness that is putting our objective of security for data, people and businesses at odds with their need for privacy and the regulations that protect it.

Data is the new oil, but it is also the fuel that powers a lot of what we have been building. However, we cannot turn a blind eye to how it can be weaponized. Weaponization is a topic I've spent a great deal of time studying over the past year. We see it in action every day, whether it is determining people's place in society or determining whether they qualify for loans. Issues of bias in algorithms are a huge concern, as we've seen in how biometrics overwhelmingly disenfranchise minorities. And the rush to add "AI" to everything is an incredible danger, because all too often the people throwing it into their products are doing it for the buzz, without understanding how or why it works when it does, and why it excludes when it doesn't (don't get me started on folks who are simply rebranding rules engines as AI).

GDPR asks us to be accountable. That’s an important thing for us to remember.