Fed-Prov and the Cloud: JIT Provisioning.Next

In my last post, I discussed the basic architectural model of Just-In-Time Provisioning, and some challenges it has in addressing enterprise needs related to cloud computing. In this post, I will propose some possible enhancements to the basic architecture that could address those challenges. Each of these solutions could be viable, though each has its own pros and cons that make it better suited to some situations than others.

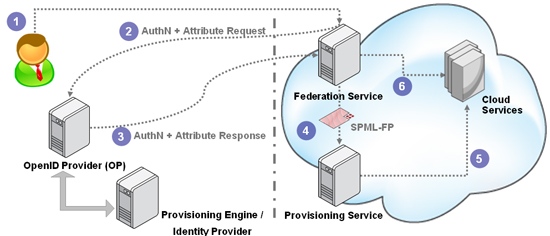

Option 1: OpenID Attribute Exchange

Some view provisioning as being little more than an attribute exchange. So it is natural to consider OpenID Attribute Exchange, which allows the federation service to request additional attributes from the OpenID Provider during the authentication flow. Essentially, when the federation service detects that the user doesn’t have an account, it could validate the claims it received as part of the token, and if it needs additional data, then it could add a request for those to its authentication request.
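To make the idea concrete, here is a minimal Python sketch of the extra parameters a federation service might append to its OpenID authentication request when it needs more data. The parameter names follow the OpenID Attribute Exchange 1.0 fetch request; the helper name `build_ax_fetch_params` and the choice of attributes are my own assumptions for illustration, not part of any particular product.

```python
from urllib.parse import urlencode

AX_NS = "http://openid.net/srv/ax/1.0"


def build_ax_fetch_params(required, optional=None):
    """Build OpenID AX fetch_request parameters (hypothetical helper).

    `required` and `optional` each map a short alias to an attribute type URI.
    """
    optional = optional or {}
    params = {"openid.ns.ax": AX_NS, "openid.ax.mode": "fetch_request"}
    # Every requested attribute is declared once under its alias.
    for alias, type_uri in {**required, **optional}.items():
        params[f"openid.ax.type.{alias}"] = type_uri
    # The aliases are then split into "must have" and "nice to have" lists.
    if required:
        params["openid.ax.required"] = ",".join(required)
    if optional:
        params["openid.ax.if_available"] = ",".join(optional)
    return params


# Illustrative attribute choice: email is required, full name is optional.
params = build_ax_fetch_params(
    {"email": "http://axschema.org/contact/email"},
    {"fullname": "http://axschema.org/namePerson"},
)
print(urlencode(params))
```

These parameters would be merged into the regular authentication request, so the extra attributes come back in the same round trip as the login itself.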

This can solve the data retrieval challenge, and squarely positions OpenID as a JIT Provisioning protocol. But the componentized architecture we have been assuming does face some other problems that it must solve in the enterprise cloud context. These are not problems with OpenID itself, rather with the overall architecture (again, this disappears when all 3 components are combined into a single service application, which is how OpenID-based RPs are able to do this today).

As discussed previously, when the SP is hosting more than one service, you often find that the attributes needed for provisioning depend on which service the user is trying to get access to. This means that the federation service would need to ask the OP for different attributes depending on which cloud service the user is trying to reach. Since the federation service can no longer just work off a static list of attributes that it should always query for, this adds the need for the federation service to be able to ask the provisioning service for the list of attributes it needs, in the context of the specific service being provisioned. While the SchemaRequest operation in SPML could be used here, there needs to be a way to differentiate (in a standard way) the complete schema supported for the target by the provisioning system from that subset needed to create an account.

Another challenge created is for subsequent first interactions of the user with the other services hosted at the same SP. Since the provisioning system already knows the user, it already has some of the attributes it needs, but not all. So when the federation service queries it for which attributes it needs to retrieve, it should reply with just those attributes it doesn’t already have (from provisioning the user to a different service). The SchemaRequest operation cannot handle this scenario currently.
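The behavior described in the last two paragraphs can be sketched in a few lines of Python. This is only an illustration of the logic, not an SPML implementation: the `ProvisioningService` class, its method names, and the service and attribute names are all hypothetical.

```python
# Hypothetical per-service lists of attributes needed to create an account.
REQUIRED_ATTRIBUTES = {
    "crm": {"email", "full_name", "department"},
    "expenses": {"email", "full_name", "cost_center", "manager"},
}


class ProvisioningService:
    """Toy SP-side provisioning service that remembers what it already knows."""

    def __init__(self, required_by_service):
        self._required = required_by_service
        self._known = {}  # user_id -> {attribute name: value}

    def attributes_to_request(self, user_id, service):
        """Return only the attributes still missing for this user and service."""
        known = self._known.get(user_id, {})
        return self._required[service] - set(known)

    def provision(self, user_id, service, attributes):
        """Store newly retrieved attributes, failing if any are still missing."""
        missing = self.attributes_to_request(user_id, service) - set(attributes)
        if missing:
            raise ValueError(f"missing attributes for {service}: {sorted(missing)}")
        self._known.setdefault(user_id, {}).update(attributes)


svc = ProvisioningService(REQUIRED_ATTRIBUTES)
first = svc.attributes_to_request("alice", "crm")
svc.provision("alice", "crm", {
    "email": "alice@example.com",
    "full_name": "Alice Example",
    "department": "Sales",
})
# On the first visit to a second service, only the gap is requested.
second = svc.attributes_to_request("alice", "expenses")
print(sorted(first), sorted(second))
```

The federation service would call something like `attributes_to_request` before building its query to the OP, which is the piece that a plain schema lookup does not provide.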

The bigger enterprise challenge is how the work on the OP side can be broken up between the OP (federation service) and the provisioning engine (policy and GRC service).

These are minor challenges to be sure (since you can always just get the full schema and update attributes that have changed to maintain consistency), but ones that become important when the flows are examined for compliance and consistency.

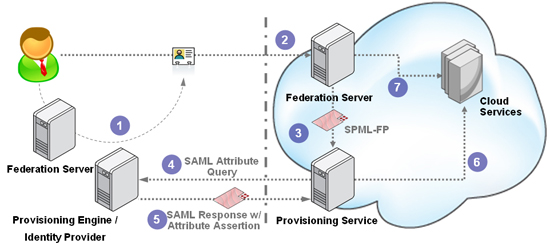

Option 2: SAML Attribute Query

In the last post, I mentioned how SAML (with the SSO Profile) and OpenID are both squarely positioned to handle the majority of the basic JIT Provisioning use cases. Good thing is, the SAML folks have been thinking about the attribute exchange problem as well, and in the spec have defined a mechanism to handle this called the SAML Attribute Query, which takes a different approach from the OpenID solution. The query for attributes in this case can go over what they call a back-channel. This can be leveraged to facilitate an attribute exchange between the Provisioning Services on each side of the federation boundary.

The big advantage of this model is that the front-channel (usually the browser, but could be other environments much harder to manipulate) is not getting overloaded with the data retrieval task. Also, since the two provisioning systems are talking to each other, they are fully aware of what is going on and can enforce standard provisioning policies as well as track and audit the happenings on the other side – major considerations in the enterprise space.

However, this does mean that it isn’t truly on-the-fly, since the SAML spec would require that a trust relationship be defined between the two sides ahead of time. There is actually a lot of interesting work being discussed right now in the SSTC that could directly influence fed-prov use cases, so I would encourage folks to keep an eye on that.

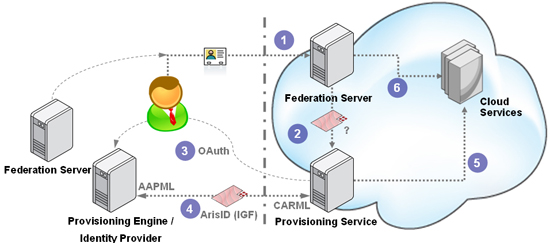

Option 3: OAuth + ArisID (IGF)

Last (but not least) is a possible solution that I first contemplated on my blog a few months ago, and have since been noodling over with other folks, and that is the thought of leveraging two emerging powerhouses – OAuth and the Identity Governance Framework. The idea here is very simple. When the user first goes to the SP, the SP can initiate the creation of an OAuth connection with the enterprise provisioning engine, facilitated by the user, of course (this is, after all, a user-centric protocol). The enterprise, for its part, can put in place policies and risk-based controls that would allow it to trust such a connection. With the connection between the parties established, the SP provisioning service can now use the ArisID APIs being defined as part of the IGF work to retrieve the data it needs. IGF adds a whole policy layer here, since the SP will provide a CARML declaration regarding itself (for instance, including details of its SAS 70 certification), the attributes it needs, and how it intends to use them (emailing user policies, storage policies, etc). The enterprise provisioning engine for its part can evaluate the CARML file and publish its own AAPML file with its policies.

One of the interesting things about this approach is that it enables the creation of on-the-fly trust between the two sides. The enterprise may never have dealt with this SP before, but can still interact with it with a certain level of trust. This trust is built on two separate components – the assertion from the user itself asking that provisioning take place (OAuth flow), and the CARML file declarations (IGF flow) – that make the creation of the federation a risk-based decision (automate-able) as opposed to a business decision (manual). Since this model also involves the provisioning engines on both sides, the security and policy controls can be enforced.
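As a rough illustration of what "risk-based and automate-able" could mean on the enterprise side, here is a small Python sketch. It does not parse real CARML or AAPML; the `SpDeclaration` and `EnterprisePolicy` classes and all of their fields are simplified, hypothetical stand-ins for the kinds of facts those documents would carry.

```python
from dataclasses import dataclass


@dataclass
class SpDeclaration:
    """Hypothetical summary of what an SP declares about itself."""
    certifications: set
    requested_attributes: set
    intended_uses: set


@dataclass
class EnterprisePolicy:
    """Hypothetical summary of the enterprise's release policy."""
    required_certifications: set
    releasable_attributes: set
    allowed_uses: set


def evaluate_trust(user_consented, declaration, policy):
    """Return (approved, reasons) by combining user consent with the declaration."""
    reasons = []
    if not user_consented:
        reasons.append("user did not authorize the connection")
    missing = policy.required_certifications - declaration.certifications
    if missing:
        reasons.append(f"missing certifications: {sorted(missing)}")
    blocked = declaration.requested_attributes - policy.releasable_attributes
    if blocked:
        reasons.append(f"attributes not releasable: {sorted(blocked)}")
    bad_uses = declaration.intended_uses - policy.allowed_uses
    if bad_uses:
        reasons.append(f"uses not allowed: {sorted(bad_uses)}")
    return (not reasons, reasons)


policy = EnterprisePolicy({"SAS 70"}, {"email", "full_name"}, {"account_creation"})
sp = SpDeclaration({"SAS 70"}, {"email"}, {"account_creation", "marketing_email"})
approved, reasons = evaluate_trust(True, sp, policy)
print(approved, reasons)
```

A real deployment would presumably weigh these factors rather than treat each as a hard veto, but even this simple form shows how the decision can be made without a person in the loop.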

Still Work To Be Done

These models obviously need to be explored and poked at in depth to determine if they hold. And while these depend on some standards work that is still to be baked, there is a lot of other standards work happening (in particular in the OpenID and OAuth arenas) that could supplant these options completely.

And there are major lifecycle management issues still to be discussed and explored. How does one handle de-provisioning in a JIT Provisioning environment? How can SPs that want to know about profile updates find out outside of the user interaction? And how do all those workflow and policy based controls that are present in Provisioning systems today fit into all of this? Well, I will be exploring some of this in my Burton Catalyst North America talk on “Beyond SPML: Access Provisioning in a Services World” in July. So be sure to check out that session if you’ll be there. In the meantime, please leave your comments and feedback here so we can keep the discussion going.

[Ends Part 4 of 4]

Great Overview of JIT Provisioning

I do miss a reference to Identity Providers issuing Information Cards and RPs using the claims from those cards to do 'on the fly' provisioning and deprovisioning. Seems a more scalable way to me than setting up myriads of federation agreements using SAML and more secure than OpenID.
